shapeShift: 2D Spatial Manipulation and Self-Actuation of Tabletop Shape Displays for Tangible and Haptic Interaction

Alexa F. Siu, Eric J. Gonzalez, Shenli Yuan, Jason B. Ginsberg, and Sean Follmer. Presented at CHI 2018 (Paper) and UIST 2017 (Demo). [Best Demo Honorable Mention]

Background

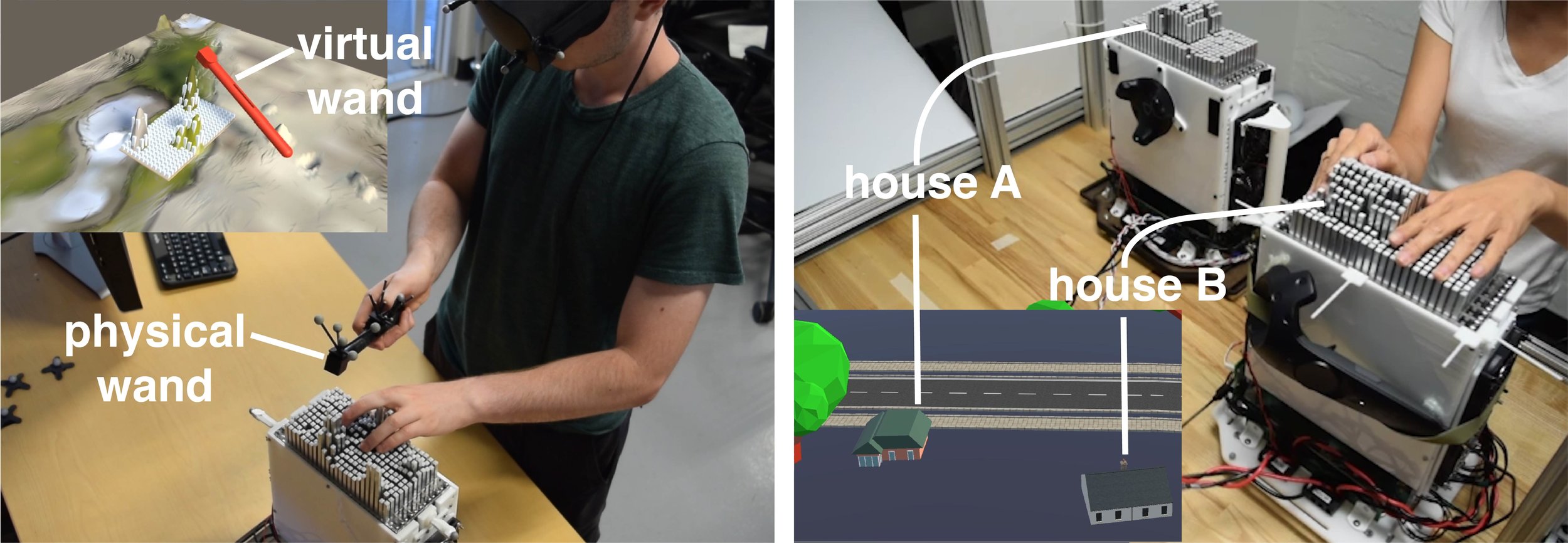

One of the biggest challenges in human-computer interaction is bridging the gap between the digital and the physical. Humans are adept at reasoning about and interacting with physical, three-dimensional objects and tools, yet most of our interactions with computers are unable to take advantage of this due to technical challenges and limitations. Tangible user interfaces (TUIs) and haptic rendering devices have been proposed as methods to better leverage our innate spatial understanding. Among TUIs, shape displays are a type of shape-changing UI that enables physical rendering of objects and surfaces in 2.5D. Applications for shape displays range from rapid design prototyping, to tangible feedback for VR, to assistive devices for the visually impaired. While existing shape displays have been explored for creating dynamic physical UIs, the devices themselves have typically been large, heavy, and limited in their workspace. To address these limitations and expand the existing interaction space, we proposed shapeShift: a mobile tabletop shape display for tangible and haptic interaction.

Design Goals & System Overview

The primary engineering goal of this project was to develop a new shape display that was compact, mobile, and (reasonably) high resolution. Beyond the display itself, we also needed a system capable of tracking its position in 3D space, so that the display's mobility could be fully leveraged to display and receive spatially-linked information. To this end, we developed shapeShift: a 12 x 24 array of individually actuated pins, made up of six 2 x 24 modules, mounted to a mobile platform. Each module consisted of two custom PCBs that housed all logic, sensing, and actuation for the pins. External position tracking was performed using either an OptiTrack IR tracking system or the cheaper and more commercially available HTC VIVE lighthouse trackers. Position information was then processed using a custom Unity application to determine what should be rendered by the pins.

When I joined this project in Fall 2016, much of the initial concept and prototyping had already been done by my talented collaborators, Alexa Siu and Shenli Yuan. My responsibilities included assisting with a PCB redesign for improved position sensing, firmware/software development, communication, mechanical testing and assembly design, and implementing a mobile robot platform.

Mechanical Design

shapeShift was designed primarily for compactness, modularity, and mobility. To minimize system complexity and ensure compactness, each pin is actuated using a single powered lead screw. Each pin consists of a square, hollow aluminum tube fixed to a custom nut, which travels along a rotating lead screw. Each lead screw is powered by a small DC motor, coupled using a 3D printed shaft coupling. To sense the pin's position, quadrature tick marks on the shaft coupling are read by a pair of photointerruptors, and the resulting ticks are integrated into a position estimate. The motors were chosen based on both their speed and slim form factor, which allows for dense packing of the pins. The main drawback of this actuation method is that it is not backdriveable, meaning the user can't push down or pull up the pins on their own. This makes providing closed-loop haptic feedback more challenging, since direct force-sensing is then required. While this is not yet implemented, it is an active research project in the SHAPE Lab. Friction in the lead screws also remains a challenge and requires us to perform maintenance regularly; it stems from our choice to trade torque for higher speed. We plan to address this by investing in higher precision, low-friction lead screws for future design iterations.
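To make the quadrature sensing described above more concrete, here is a minimal Python sketch of how two photointerruptor channels can be decoded into a signed tick count and then into pin travel. It is an illustration only, not the shapeShift firmware (which was written in Arduino). The names `QuadratureCounter` and `counts_to_mm`, the choice of which channel order counts as "forward", and the parameters `counts_per_rev` and `lead_mm_per_rev` are all assumptions made for the example.

```python
# Assumed forward sequence of the 2-bit state (A << 1) | B as the coupling turns.
# Quadrature signals form a Gray code: exactly one channel changes per step.
_FORWARD = [0b00, 0b01, 0b11, 0b10]


def _build_step_table():
    """Map (previous_state, new_state) to +1 (forward) or -1 (backward)."""
    table = {}
    for i, state in enumerate(_FORWARD):
        nxt = _FORWARD[(i + 1) % 4]
        table[(state, nxt)] = +1
        table[(nxt, state)] = -1
    return table


_STEP = _build_step_table()


class QuadratureCounter:
    """Accumulates signed counts from two sampled channels A and B."""

    def __init__(self, a=0, b=0):
        self.state = (a << 1) | b
        self.count = 0
        self.errors = 0  # transitions where both channels changed at once

    def update(self, a, b):
        new_state = (a << 1) | b
        if new_state != self.state:
            step = _STEP.get((self.state, new_state))
            if step is None:
                self.errors += 1  # a sample was missed; direction is unknown
            else:
                self.count += step
            self.state = new_state
        return self.count


def counts_to_mm(count, counts_per_rev, lead_mm_per_rev):
    """Convert counts to linear travel (both parameters are hypothetical)."""
    return count / counts_per_rev * lead_mm_per_rev
```

Feeding the counter the samples (0,1), (1,1), (1,0), (0,0) advances it by four counts, and replaying them in reverse order brings it back to zero. Because the count is only relative, some absolute reference is still needed at startup, which is the role the limit switch plays in calibration.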

PCBs were mounted vertically between rows to minimize space, fixed to supports on the sides of the display; this layout also allowed us to use sensors (photointerruptors and limit switches) mounted directly to the PCB surface. Each pair of rows made up an individual module that could be stacked along one dimension to increase the display size. We ultimately chose a display size (9 x 17.5 cm) based on the average max finger spread of the human hand, with the reasoning that a display would likely be used by a single hand in many applications. Laser cut acrylic grids were used to guide the pins and keep them aligned, and a 3D printed motor mount bridges the module's two PCBs on both sides, doubling as internal structural support. 3D printed covers were placed over the motors to shield the underlying photointerruptors from IR interference.

Modules were mounted directly to a base platform and could be individually removed for easy access to internal components. This base could then be mounted on either a passive mobile platform (which can be smoothly pushed around on omni-caster wheels) or an omnidirectional mobile robot, which enables the display to actively follow a user's hand or provide lateral resistance, for example.

Electrical Design

To control the motors and receive commands from a central computer, each PCB was designed with four microcontrollers (MCUs) modeled after the Teensy 3.2. Each MCU controlled 6 motors via motor drivers, measured their positions using pairs of photointerruptors and a limit switch for calibration, and communicated with all other microcontrollers as well as a central PC over a full-duplex RS485 bus in a master/slave configuration. We developed firmware in Arduino (using Teensyduino) to manage the pin positions based on commanded values received from a central PC, creating a custom protocol that allows for intercommunication between all 48 MCUs. Closed-loop PID control was used to govern each pin position.
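For readers unfamiliar with closed-loop PID control, the following is a minimal Python sketch of a per-pin position loop. It is not our firmware: the class name `PinPID`, the gains, the output limit of 255 (standing in for a PWM duty range), and the toy plant in `simulate` (pin speed proportional to the motor command) are all assumptions chosen for illustration.

```python
class PinPID:
    """Textbook PID controller with a clamped output (no anti-windup)."""

    def __init__(self, kp, ki, kd, out_limit=255.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit  # hypothetical motor command range
        self.integral = 0.0
        self.prev_error = None

    def step(self, target, measured, dt):
        error = target - measured
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a startup kick.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.out_limit, min(self.out_limit, out))


def simulate(pid, target_mm, steps, dt=0.001, mm_per_s_per_unit=0.5):
    """Drive a toy pin model whose speed is proportional to the command."""
    pos = 0.0
    for _ in range(steps):
        command = pid.step(target_mm, pos, dt)
        pos += mm_per_s_per_unit * command * dt
    return pos


if __name__ == "__main__":
    final = simulate(PinPID(kp=20.0, ki=0.0, kd=0.0), target_mm=30.0, steps=2000)
    print(f"pin position after 2 s: {final:.3f} mm")
```

In this toy model the command saturates while the pin is far from its target, then the error decays smoothly once the proportional term falls below the limit. A real lead-screw pin also has friction and dead band, so gains found on a model like this would only be a starting point for tuning on hardware.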

The most challenging aspect of the electrical design was learning the ins and outs of PCB design. When we first began, we ran into several issues with sensor noise, power fluctuation, and elusive shorts. However, this allowed us to gain valuable debugging skills (for both hardware and firmware), and learn first-hand the importance of things like proper capacitor selection, trace width, and via placement. We used the open source, collaborative CircuitMaker software to custom design all PCBs in-house. We also used an Othermill CNC to mill smaller batches of custom PCBs to test circuits prior to ordering.

Software & Position Tracking

One of our main priorities with shapeShift was to make it as simple as possible to render and prototype different objects, shapes, and models. With that in mind, we created a general purpose application in Unity that enables the rendering of any imported CAD model. The key to this was the implementation of a ray casting script, where pin positions were calculated by casting vertical rays from each pin location into the virtual world. When a ray collided with a virtual object, the displacement was calculated and the pin's desired position was updated, visualized on-screen in Unity, and forwarded to the physical display. The Unity application was also used to interface with the real-world tracking system (OptiTrack or HTC VIVE) and sync the real-world position of the display with the virtual environment. The application runs two additional parallel threads to handle USB serial communication. One thread communicates updates to pin position or display settings (e.g., PID parameters) with the master MCU. The second thread communicates with the omnidirectional robot when the display is mounted to the active mobile platform.
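The ray casting idea can be sketched outside of Unity as well. The Python example below is a simplified stand-in for our Unity script, not a port of it: it casts a vertical ray at each pin location against a list of triangles and keeps the highest hit as that pin's target height. The function names, the pin `pitch`, the `max_travel` limit, and the use of `origin` to represent the tracked display position are all hypothetical.

```python
def ray_height(x, y, tri, eps=1e-9):
    """Height at which a vertical ray through (x, y) hits triangle tri.

    tri is three (x, y, z) vertices. Returns None if the ray misses.
    Uses barycentric coordinates of (x, y) in the triangle's XY projection.
    """
    (x0, y0, z0), (x1, y1, z1), (x2, y2, z2) = tri
    det = (y1 - y2) * (x0 - x2) + (x2 - x1) * (y0 - y2)
    if abs(det) < 1e-12:
        return None  # triangle is edge-on to a vertical ray
    w0 = ((y1 - y2) * (x - x2) + (x2 - x1) * (y - y2)) / det
    w1 = ((y2 - y0) * (x - x2) + (x0 - x2) * (y - y2)) / det
    w2 = 1.0 - w0 - w1
    if min(w0, w1, w2) < -eps:
        return None  # (x, y) lies outside the triangle
    return w0 * z0 + w1 * z1 + w2 * z2


def pin_heights(triangles, rows, cols, pitch, origin=(0.0, 0.0), max_travel=50.0):
    """Target height for every pin, clamped to the range [0, max_travel].

    origin is the world position of pin (0, 0); moving the display
    corresponds to changing origin while the virtual model stays fixed.
    """
    ox, oy = origin
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            x, y = ox + c * pitch, oy + r * pitch
            hits = [ray_height(x, y, t) for t in triangles]
            hits = [h for h in hits if h is not None]
            top = max(hits, default=0.0)  # topmost surface under the ray
            row.append(min(max(top, 0.0), max_travel))
        grid.append(row)
    return grid
```

Calling `pin_heights` again with a new `origin` each time the tracker reports a new display position is what makes the rendered content appear fixed in space while the display moves over it. A loop over every triangle is fine for a sketch, but a physics engine's accelerated raycast scales much better for detailed CAD models.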

We developed multiple applications to demonstrate shapeShift, including: model rendering and manipulation, exploration of spatial terrain data, modifying terrain data in VR, and urban planning.

User Evaluation

Since shapeShift is unique in its capabilities as a mobile shape display, it was important to investigate and evaluate how this mobility affects a user's interaction with rendered content. While there are many questions to explore with such a new device, we chose to explore the benefits of the increased workspace and lateral manipulation afforded by a mobile shape display. We hypothesized the passive mobility could be leveraged to help users better learn and understand spatial data by providing additional physical context through proprioception; thus, a spatial haptic search task was selected comparing static vs. mobile displays. In this task, users had to navigate a virtual environment partially rendered by the display and locate various shapes. In the static condition, the display was fixed and a touchpad was used to scroll content across the display and thus navigate the map. In the passive condition, the display was mounted on a caster wheel platform and the user moved the display throughout a space that was spatially synced with the virtual environment as it rendered the content. Results showed a 30% decrease in navigation path lengths, 24% decrease in task time, 15% decrease in mental demand and 29% decrease in frustration in favor of the passive condition. For this study, in addition to aiding the study design and implementation, I was responsible for programming (Unity/C#) and administering a pilot study on object recognition to confirm that users could easily distinguish between the rendered target shapes.

Press

Wall Street Journal, April 25 2018. Volkswagen Brings Sense of Touch to Virtual Reality.

Gizmodo, April 27 2018. This Shape-Shifting, Pin-Headed Robot Lets You Feel Virtual Objects With Your Bare Hands

Fast Co. Design, April 30 2018. A Computer Mouse For The Year 3000.

Demo

A.F. Siu, E.J. Gonzalez, S.Y. Yuan, J.B. Ginsberg, A.R. Zhao, and S.W. Follmer, "shapeShift: a Mobile Tabletop Shape Display for Tangible and Haptic Interaction", in UIST '17 Proceedings of the 30th Annual Symposium on User Interface Software and Technology, Quebec City, CAN, ACM (2017), demonstration. [Best Demo Honorable Mention]